Navigating the AI Studio: A Realistic Guide to Adopting MakeShot for Content Creation

Reviewed By: Khushi Choudhary

The first time you open a professional creative dashboard, the feeling is rarely pure excitement. It is usually a mix of curiosity and a specific kind of paralysis. You have a concept in your head, and you have a suite of tools promising to build it, but the bridge between the two is not instant magic. It is a workflow.

For the past few years, the narrative around generative media has been dominated by viral clips and hyperbolic claims of “Hollywood in a box.” But for those of us actually doing the work—the strategists, the social managers, and the indie creators—the reality is more grounded. It involves trial, error, and learning how to speak the language of the machine.

This guide explores what it actually looks like to integrate a unified platform like MakeShot into a daily creative routine. We will look at the practicalities of using an AI Video Generator, the nuance of crafting consistent visuals, and how to manage expectations when you are just starting out.

The “All-in-One” Learning Curve

One of the biggest hurdles for beginners is “model fatigue.” You usually have to subscribe to one tool for images, another for video, and a third for audio. This fragmentation makes the learning curve steep because every interface has different quirks.

MakeShot attempts to solve this by centralizing the heavy hitters—Veo 3, Sora 2, and Nano Banana Pro—under one roof. However, having access to everything doesn’t mean you should use everything at once.

When you first log in, treat the platform as a sandbox, not a vending machine. The goal isn’t to generate a final Super Bowl ad on your first click. The goal is to understand the “personality” of each model.

For example, you might find that Sora 2 excels at cinematic camera movements but requires very specific prompting regarding lighting. Conversely, Veo 3 might handle photorealism differently. The advantage of a unified AI Video Generator environment is that you can run the same prompt through different engines to see which one aligns with your vision, without switching browser tabs or managing five different subscriptions.

Breaking Down the Visual Workflow

If you are moving from traditional stock photography or graphic design to an AI Image Creator, the biggest shock is often the lack of direct control. You cannot simply “nudge” an element to the left. You have to guide the AI to do it for you.

The Consistency Challenge

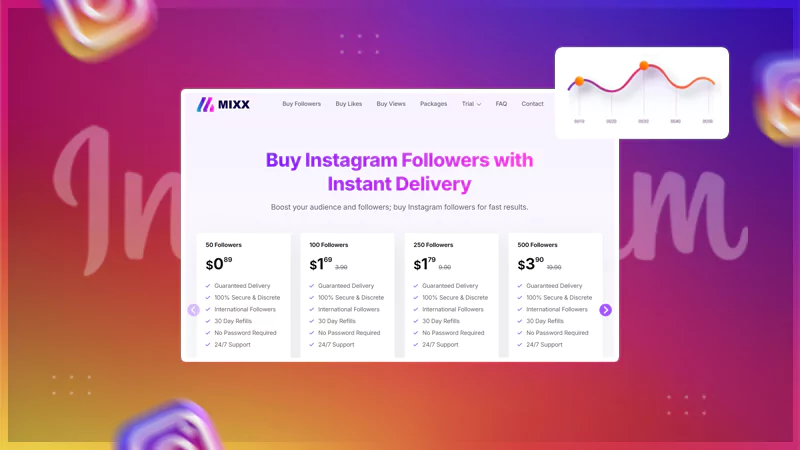

A common mistake beginners make is treating every image generation as a standalone event. This works for abstract art, but it fails for brand storytelling where you need the same character or product to appear in multiple shots.

This is where features like Nano Banana Pro’s reference support become critical. In my own testing of various workflows, I found that relying solely on text prompts leads to “drift”—where your main character looks different in every frame.

Uploading up to four reference images in Nano Banana Pro anchors the AI Image Creator. You are essentially telling the model, “Innovate on the background, but keep these facial features or this product design rigid.”

Iterative Prompting

Don’t expect the first result to be perfect. A realistic workflow looks like this:

- Broad Concept: Generate four variations to test lighting and composition.

- Refinement: Pick the best seed and tweak the prompt adjectives.

- Reference Locking: Once the style is set, upload that output as a reference image for the next batch.
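One way to keep these three steps reproducible is to log each pass as you go. The sketch below is only an illustration: the `Iteration` record, the example prompts, the seed value, and the file name `mug_v2.png` are all hypothetical, and it does not call any MakeShot API. The only figure taken from this guide is the four-image reference limit.

```python
from dataclasses import dataclass, field
from typing import List, Optional

MAX_REFERENCES = 4  # this guide cites up to four reference images for Nano Banana Pro


@dataclass
class Iteration:
    stage: str                   # "broad", "refine", or "lock"
    prompt: str
    seed: Optional[int] = None   # seed of the variation you kept, if any
    references: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Reject a pass that lists more reference images than the limit above.
        if len(self.references) > MAX_REFERENCES:
            raise ValueError(f"at most {MAX_REFERENCES} reference images")


# Hypothetical prompts, seed, and file name, for illustration only.
log = [
    Iteration("broad", "ceramic mug on a wooden desk, morning light"),
    Iteration("refine", "ceramic mug on a wooden desk, soft warm morning light", seed=1234),
    Iteration("lock", "same mug on a cafe counter", references=["mug_v2.png"]),
]

for step in log:
    print(step.stage, "|", step.prompt, "| refs:", len(step.references))
```

A plain spreadsheet works just as well. The point is to record which prompt, seed, and reference image produced each result you kept.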

Moving Pictures: The Reality of AI Video

Transitioning from static images to video is where complexity compounds. An AI Video Generator introduces time and physics into the equation.

When I first started experimenting with these tools, I wasted a lot of credits trying to generate long, complex sequences in a single go. I quickly learned that AI models hallucinate when you ask them to do too much at once.

Short Clips vs. Long Stories

The most effective way to use models like Sora 2 is to think like an editor, not a director. Do not try to generate a 60-second commercial in one prompt. Instead, generate 4-second clips:

- An establishing shot of a city.

- A close-up of a character smiling.

- A product shot on a table.

You then stitch these together in your editor. This “modular” approach reduces the risk of the video morphing into something weird halfway through.
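To see how the modular approach scales, you can plan the shot list before spending any credits. This is a minimal sketch: `Shot` and `clips_needed` are hypothetical helpers, not part of any tool, and the 4-second default simply mirrors the clip length suggested above.

```python
import math
from dataclasses import dataclass


@dataclass
class Shot:
    description: str      # what the clip should show
    seconds: float = 4.0  # short clips, as suggested above


def clips_needed(total_seconds: float, clip_seconds: float = 4.0) -> int:
    """Number of short clips required to cover a target runtime."""
    return math.ceil(total_seconds / clip_seconds)


shot_list = [
    Shot("An establishing shot of a city"),
    Shot("A close-up of a character smiling"),
    Shot("A product shot on a table"),
]

runtime = sum(shot.seconds for shot in shot_list)
print(f"{len(shot_list)} shots cover {runtime:.0f} seconds")
print(f"A 60-second spot needs about {clips_needed(60)} clips")
```

Under these assumptions, a 60-second commercial comes to about 15 separate clips, which is a useful number to have before you start generating.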

The Audio Equation

For a long time, AI videos were silent. You had to generate the clip, then go find stock music, then try to sync sound effects. It was a disjointed process that killed the illusion of reality.

This is where Veo 3 changes the workflow significantly. Because it supports native audio generation—creating dialogue, ambient noise, and sound effects with the video—it removes a massive step in post-production. However, this requires you to prompt for sound. You can’t just say “a car driving.” You have to specify “a car driving on gravel with crunching tires and distant city traffic.”
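If you write many prompts, a small helper can remind you to attach sound cues to every visual description. The sketch below only builds a text string. `build_prompt` is a hypothetical function, and nothing here reflects how Veo 3 actually parses prompts.

```python
from typing import List


def build_prompt(visual: str, sounds: List[str]) -> str:
    """Join a visual description with explicit sound cues."""
    if not sounds:
        return visual
    if len(sounds) == 1:
        return f"{visual} with {sounds[0]}"
    # Comma-separate all cues except the last, which is joined with "and".
    return f"{visual} with {', '.join(sounds[:-1])} and {sounds[-1]}"


prompt = build_prompt(
    "a car driving on gravel",
    ["crunching tires", "distant city traffic"],
)
print(prompt)
# a car driving on gravel with crunching tires and distant city traffic
```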

Managing Expectations and Costs

It is important to have a frank discussion about the investment required. While an AI Video Generator is cheaper than hiring a film crew, it is not free, and it is not instant.

The “Trial Tax”

When you are budgeting for a project using MakeShot, factor in a “trial tax.” If you need five usable video clips, you might need to generate fifteen to get there.

- Grok might give you a wild, creative abstract result that isn’t right for a corporate client.

- Seedream might be fast but lack the texture you wanted.

This exploration consumes credits. Professional content creators view this not as waste, but as the cost of digital scouting. You are paying to explore visual possibilities that would be impossible to shoot physically.
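The trial tax is easy to estimate up front. The sketch below uses the ratio from the example above (fifteen generations for five usable clips, or three attempts per keeper). The cost of 10 credits per generation is a made-up placeholder, so substitute the real price from your plan.

```python
import math


def generations_needed(usable_clips: int, attempts_per_keeper: float = 3.0) -> int:
    """Estimate total generations, given how many attempts one usable clip takes."""
    if attempts_per_keeper < 1:
        raise ValueError("attempts_per_keeper must be at least 1")
    return math.ceil(usable_clips * attempts_per_keeper)


def credit_budget(usable_clips: int, attempts_per_keeper: float, credits_per_generation: int) -> int:
    """Total credits to set aside, including the trial tax."""
    return generations_needed(usable_clips, attempts_per_keeper) * credits_per_generation


# 10 credits per generation is a hypothetical price, not a MakeShot figure.
print(generations_needed(5))      # 15
print(credit_budget(5, 3.0, 10))  # 150
```

Track your own keep rate over a few projects and replace the 3.0 with the number you actually observe.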

Commercial Viability

One anxiety many new users have is ownership. “If I make this, can I actually sell it?”

In the early days of AI, this was a gray area. Platforms like MakeShot have resolved it by offering full commercial rights. Whether you are using Nano Banana Pro for product mockups or Sora 2 for a YouTube intro, the asset belongs to you. This clarity allows agencies to bill clients for these assets without legal fear.

A Practical Beginner’s Workflow

If you are ready to start your first project, here is a recommended roadmap that minimizes frustration.

Phase 1: The Asset Library

Before you touch the video tools, build your world. Use the AI Image Creator to generate your locations and characters.

- Use Nano Banana Pro to finalize the look of your subject.

- Save your best results. You will need these as reference points.

Phase 2: The Motion Test

Take your best static image and use it as a starting point for the AI Video Generator.

- Upload the image to Veo 3 or Sora 2.

- Ask the model to animate a simple movement (e.g., “slow zoom in,” “pan right,” “wind blowing hair”).

- Pro Tip: Keep the motion simple. High-speed action is harder for AI to render consistently than slow, cinematic movements.

Phase 3: The Assembly

Take your generated clips into your standard video editor. This is where the “human touch” happens. The AI provides the raw footage; you provide the pacing, the color grading, and the final narrative structure.

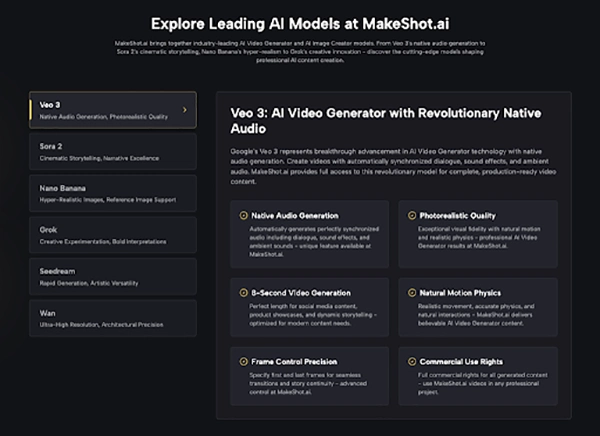

Comparing the Models: A Quick Reference

To help you decide where to spend your time (and credits), here is a breakdown of how the different engines within MakeShot generally fit into a workflow.

| Model | Best Use Case | Key Strength |
| --- | --- | --- |
| Sora 2 | Cinematic Storytelling | Excellent for narrative flow and complex camera moves. |
| Veo 3 | Realistic Video & Audio | Native audio generation creates immersive, ready-to-use clips. |
| Nano Banana Pro | Product & Character Images | Supports up to 4 reference images for high consistency. |
| Grok | Creative Ad Concepts | Good for brainstorming and stylistic variations. |
| Seedream | Rapid Prototyping | Fast generation when you need to iterate ideas quickly. |

The Future of Your Workflow

Adopting an AI Video Generator or AI Image Creator is not about replacing your creativity. It is about expanding your capacity to execute.

There will be moments of frustration where the AI refuses to understand what a “hand” looks like, or generates a video where the physics makes no sense. That is part of the current landscape. But there will also be moments where Veo 3 perfectly captures the sound of rain hitting a window, or Nano Banana Pro renders a product shot that looks like it cost $5,000 to produce.

The key is to remain curious and patient. Start small, respect the learning curve, and use the unified power of MakeShot to find the workflow that fits your specific storytelling style. The tools are powerful, but they still need a pilot.